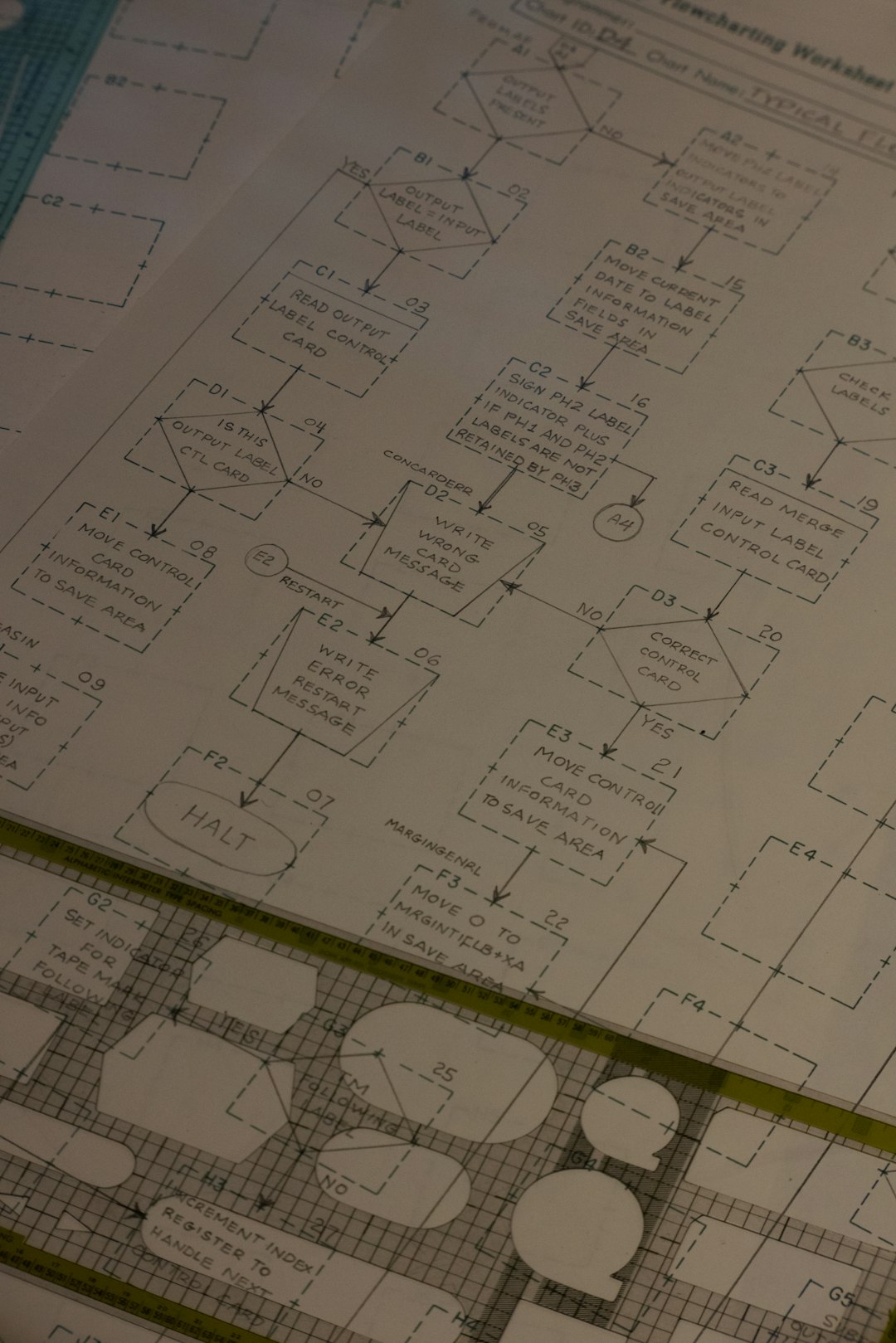

In the age of artificial intelligence and massive data consumption, vector databases have emerged as a game-changing technology. Unlike traditional databases that store structured data in rows and columns, vector databases are optimized for storing and querying high-dimensional vector embeddings. These embeddings are representations derived from machine learning models—typically used in areas such as semantic search, recommendation systems, computer vision, and natural language processing. Evaluating these databases involves careful consideration of three critical factors: cost, consistency, and scale.

Understanding Vector Databases

Before diving into evaluation metrics, it’s essential to understand what vector databases are and why they are becoming indispensable. As machine learning models extract features from unstructured data, they generate numerical representations, called vectors or embeddings, that capture the salient features of that data. For instance, an image or a paragraph of text can be transformed into a 256- or 768-dimensional vector. The goal of a vector database is to allow efficient and accurate similarity searches over this high-dimensional space.
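
To make "similarity search" concrete, here is a minimal sketch of exact (brute-force) search using cosine similarity. The function names and the toy three-dimensional vectors are illustrative assumptions, not any particular database's API. Production systems replace this linear scan with an approximate index.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # convention chosen for this sketch: zero vectors match nothing
    return dot / (norm_a * norm_b)

def top_k(query, vectors, k):
    """Exact search: score every stored vector, keep the k most similar."""
    scored = [(cosine_similarity(query, v), key) for key, v in vectors.items()]
    scored.sort(reverse=True)
    return [(key, score) for score, key in scored[:k]]

# Toy 3-dimensional "embeddings"; real ones have hundreds of dimensions.
vectors = {
    "cat": [0.9, 0.1, 0.0],
    "dog": [0.8, 0.2, 0.1],
    "car": [0.0, 0.1, 0.9],
}
results = top_k([1.0, 0.0, 0.0], vectors, k=2)
print(results)  # "cat" and "dog" rank ahead of "car"
```

The scan touches every stored vector on every query, which is why cost and scale become the central questions as collections grow.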

This capability drives innovative applications such as:

- Semantic search engines that understand context and meaning

- Personalized content recommendations

- Biometric recognition (e.g., facial recognition)

- Natural language understanding and generation

However, the performance and usability of vector databases hinge on how well they can manage trade-offs across three fundamental dimensions: cost, consistency, and scale.

Evaluating Cost: The Price of Speed and Precision

One of the most pressing concerns when selecting a vector database is cost. While many modern vector databases offer open-source versions, enterprise-grade solutions can quickly become expensive due to storage, compute, and networking overhead—especially when dealing with billions of vectors and frequent updates.

Key cost components include:

- Storage Cost: Vector data is inherently large. For example, storing 100 million vectors of 768 dimensions in float32 (4 bytes per component) requires about 307 GB, or roughly 286 GiB, of raw storage before any index overhead or replication.

- Compute Cost: Indexing and searching vectors is dominated by distance computations, which run on GPUs or on high-performance CPUs. Index builds in particular can be compute-intensive.

- Networking Cost: In distributed environments, vector search often requires communication among multiple nodes, which can increase latency and bandwidth usage.

- Operational Overhead: Constant maintenance of indexes, backups, and scaling operations adds further to the total cost of ownership.
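
The storage figure in the list above is simple arithmetic, and it is worth redoing for your own dimensions and data types. The sketch below is a back-of-the-envelope estimate for raw vectors only. The helper name is hypothetical, and the one-byte case assumes some form of 8-bit quantization, which not every database supports in the same way.

```python
def raw_vector_bytes(num_vectors, dimensions, bytes_per_component=4):
    """Bytes needed for the raw vectors alone (float32 = 4 bytes per component)."""
    return num_vectors * dimensions * bytes_per_component

total = raw_vector_bytes(100_000_000, 768)
gb = total / 10**9    # decimal gigabytes
gib = total / 2**30   # binary gibibytes
print(f"{gb:.1f} GB, {gib:.1f} GiB")  # prints: 307.2 GB, 286.1 GiB

# Assumption: components quantized to 8 bits each, trading some accuracy for space.
quantized_gb = raw_vector_bytes(100_000_000, 768, bytes_per_component=1) / 10**9
print(f"{quantized_gb:.1f} GB")  # prints: 76.8 GB
```

Index structures, replicas, and metadata come on top of these numbers, so treat them as a lower bound.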

Different databases take different approaches to manage these costs. For example:

- Milvus leverages GPU-accelerated searches and data compression techniques to reduce cost while maintaining performance.

- Pinecone offers a managed vector database and abstracts away infrastructure, trading flexibility for simplicity and pricing transparency.

- Weaviate allows hybrid search with APIs and vectorization built into the engine, offering more utility per dollar spent for developers who need tight semantic integration.

Ultimately, choosing a vector database based on cost requires weighing performance needs against infrastructure budget. Efficient storage strategies and CPU/GPU utilization significantly affect long-term ROI.

Consistency: The Overlooked Dimension

While cost and scale often dominate the discussion around vector search, consistency is a critical yet often overlooked dimension. In traditional databases, consistency means that data stays synchronized and accurate across nodes and after concurrent operations. In vector databases, it carries additional complexity because inserts, updates, and deletions must also keep the search index intact.

There are two types of consistency to be aware of:

- Index Consistency: Vector databases rely on approximate nearest neighbor (ANN) algorithms which require building and maintaining indexes. When data is added or deleted, these indexes must update reliably without corrupting search results.

- Query Consistency: In distributed systems, a query hitting different nodes might yield slightly different results if indexes are not synchronized in real time. This is a form of eventual consistency: replicas converge once updates have propagated, but until then the same query can return different answers. That window is not always acceptable in mission-critical applications.

To illustrate, a retail recommendation engine that serves millions of queries per hour cannot afford inconsistent results between a user’s search on mobile versus web. A slight variation in results due to asynchronous updates can erode trust and relevance.

Some solutions to improve consistency include:

- Periodic batch indexing operations

- Commit logs to track and replay vector insertions or deletions

- Versioned indexes allowing queries to target only consistent snapshots
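
The last two ideas in the list, commit logs and versioned indexes, can be combined. The toy class below is a sketch under simplifying assumptions (single process, in-memory, no real ANN index), and its class and method names are invented for illustration. Writes are appended to a log, and queries only ever see a published snapshot.

```python
class VersionedVectorStore:
    """Toy store: writes go to a commit log; reads see a published snapshot."""

    def __init__(self):
        self.log = []             # pending ("upsert" | "delete", key, vector) entries
        self.snapshots = {0: {}}  # version number -> data visible at that version
        self.latest = 0

    def upsert(self, key, vector):
        self.log.append(("upsert", key, list(vector)))

    def delete(self, key):
        self.log.append(("delete", key, None))

    def publish(self):
        """Replay pending log entries into a new snapshot version."""
        data = dict(self.snapshots[self.latest])
        for op, key, vector in self.log:
            if op == "upsert":
                data[key] = vector
            else:
                data.pop(key, None)
        self.log.clear()
        self.latest += 1
        self.snapshots[self.latest] = data
        return self.latest

    def keys(self, version=None):
        """Keys visible at a snapshot (defaults to the latest published one)."""
        v = self.latest if version is None else version
        return sorted(self.snapshots[v])

store = VersionedVectorStore()
store.upsert("a", [0.1, 0.2])
v1 = store.publish()
store.upsert("b", [0.3, 0.4])
store.delete("a")
pending_view = store.keys()  # unpublished writes are not visible yet
v2 = store.publish()
print(pending_view, store.keys(v1), store.keys(v2))
```

Every reader pinned to the same version sees the same data, at the price of a delay between a write and its visibility. That delay is the freshness a real system would need to measure.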

When evaluating a vector database, ask two questions: How quickly do inserts, deletes, and updates become available for query? And how resilient is the indexing mechanism under concurrent updates?

Scale: From Thousands to Billions

Scalability is perhaps the most marketed metric of vector databases—but also the most nuanced to evaluate across real-world conditions. Vectors at scale pose challenges for both horizontal scaling (distributing across machines) and query latency.

Here are some scalability challenges to consider:

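- Index Size: Indexes such as HNSW or IVF add overhead on top of the raw vectors. HNSW, for example, stores a list of graph links for every vector, so index memory grows at least in proportion to the number of vectors and is often the first resource to run out.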

- Latency vs Accuracy: Larger datasets often force approximate search methods that trade off precision for speed. Business needs should determine how much error is acceptable in results.

- Throughput: Systems should be benchmarked not just on single-query latency but also on how many concurrent searches they can handle per second.

- Distributed Performance: Sharding and partitioning strategies significantly affect database behavior under load. Poor distribution causes hotspots that bottleneck performance.
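
The latency-versus-accuracy trade-off in the list above is usually quantified as recall@k: the share of the true top-k neighbours that the approximate search actually returned. A minimal sketch follows, with hypothetical IDs standing in for the results of an exact search and an ANN search.

```python
def recall_at_k(approx_ids, exact_ids, k):
    """Fraction of the true top-k neighbours found by the approximate search."""
    truth = set(exact_ids[:k])
    if not truth:
        return 0.0
    return len(truth & set(approx_ids[:k])) / len(truth)

exact = ["v1", "v2", "v3", "v4", "v5"]   # ground truth from a brute-force scan
approx = ["v1", "v3", "v2", "v9", "v5"]  # what an ANN index returned
score = recall_at_k(approx, exact, k=5)
print(score)  # prints: 0.8
```

Order within the top k does not affect the score here. Averaging it over a representative query set, at each index setting you are considering, shows how much accuracy each latency gain costs.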

Some vector databases offer automatic sharding and replication (e.g., Pinecone, Vespa), while others leave distribution to you. Faiss, for example, is a similarity-search library rather than a database, so sharding and replication have to be built around it.
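
For intuition about what sharding involves, the sketch below shows hash-based placement and a scatter-gather query: each shard returns its local top-k, and a coordinator merges them. This is a simplified in-memory illustration with invented function names, not how any specific product implements it.

```python
import heapq
import zlib

def shard_for(key, num_shards):
    """Stable hash-based placement (crc32 is deterministic across runs)."""
    return zlib.crc32(key.encode("utf-8")) % num_shards

def squared_distance(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))

def search_shard(shard, query, k):
    """Local top-k on one shard, as (distance, key) pairs."""
    candidates = ((squared_distance(query, v), key) for key, v in shard.items())
    return heapq.nsmallest(k, candidates)

def scatter_gather(shards, query, k):
    """Ask every shard for its local top-k, then merge into a global top-k."""
    partials = [hit for shard in shards for hit in search_shard(shard, query, k)]
    return heapq.nsmallest(k, partials)

num_shards = 3
shards = [{} for _ in range(num_shards)]
data = {f"item-{i}": [float(i), float(i % 4)] for i in range(20)}
for key, vec in data.items():
    shards[shard_for(key, num_shards)][key] = vec

hits = scatter_gather(shards, [5.0, 1.0], k=3)
print(hits)
```

Because every shard returns k candidates, the merged result matches a search over the unsharded data. The cost is that each query fans out to all shards, so the slowest shard sets the latency, and uneven placement produces hotspots.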

For an organization planning to scale from millions to billions of vectors, it’s critical to evaluate:

- Available sharding and replication strategies

- Support for cloud-native auto-scaling

- Query latency at peak loads

- Hardware compatibility (CPU, GPU, FPGA)

Scalability is not just about storage size; it’s also about maintaining acceptable performance and consistency as data and traffic grow. Be wary of benchmarks that report only ideal conditions, such as a single client with no concurrent queries, or that omit the latency target the results were measured against.
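
One way to check a vendor benchmark is to run your own under concurrency and look at tail latency as well as averages. The harness below is a rough sketch: `fake_search` is a placeholder for a real client call, and the thread pool and nearest-rank percentile are assumptions chosen for simplicity.

```python
import math
import time
from concurrent.futures import ThreadPoolExecutor

def percentile(sorted_values, pct):
    """Nearest-rank percentile of an already sorted, non-empty list."""
    rank = max(1, math.ceil(len(sorted_values) * pct / 100))
    return sorted_values[rank - 1]

def timed_call(search, query):
    """Run one query and return its wall-clock latency in seconds."""
    start = time.perf_counter()
    search(query)
    return time.perf_counter() - start

def run_load(search, queries, concurrency):
    """Issue queries from a thread pool; return (queries per second, sorted latencies)."""
    started = time.perf_counter()
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        latencies = sorted(pool.map(lambda q: timed_call(search, q), queries))
    elapsed = time.perf_counter() - started
    return len(latencies) / elapsed, latencies

def fake_search(query):
    time.sleep(0.001)  # placeholder: replace with a call to the database under test
    return []

qps, latencies = run_load(fake_search, list(range(200)), concurrency=8)
p50, p99 = percentile(latencies, 50), percentile(latencies, 99)
print(f"{qps:.0f} queries/s, p50={p50 * 1000:.2f} ms, p99={p99 * 1000:.2f} ms")
```

Repeating the run at several concurrency levels, and while writes are in flight, gives a more realistic picture than a single-client number.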

Conclusion

As the demand for intelligent search and context-aware applications grows, vector databases will continue to shape the data infrastructure of the future. Evaluating the right solution requires a strategic balance between three pillars:

- Cost: Tightly manage infrastructure and resource usage to align with budgetary constraints

- Consistency: Ensure indexing and query integrity across updates and distributed environments

- Scale: Plan for growth in both storage and performance with smart sharding and indexing strategies

Each use case—from real-time recommendation engines to federated search—has unique performance and reliability goals. There is no one-size-fits-all vector database, but understanding trade-offs and matching them to business needs significantly improves decision-making.

Whether you’re prototyping an AI-powered product or scaling an enterprise search system, thoughtful evaluation of your vector infrastructure can prevent future roadblocks and enable rapid innovation.