Keeping a website healthy and visible in search engines often means balancing security, performance, and SEO. While I was hardening the security of a WordPress site, one of the plugins I installed severely disrupted how its XML sitemaps were generated. That in turn broke how search engines discovered and indexed new content. This article details how that happened, what it cost, and the regeneration workflow I implemented to recover our search visibility.

TL;DR

A commonly used security plugin disrupted my website’s XML sitemap generation, effectively preventing Google and Bing from fetching the sitemap. As a result, major content updates didn’t make it into search results. After diagnosing the issue, I adjusted the firewall settings and fully regenerated the sitemaps with a different plugin. Search engines were indexing the site normally again within days.

Understanding the Problem

It all started when I noticed a gradual drop in organic traffic over about two weeks. I hadn’t changed any major on-page SEO strategies, so I suspected an indexing issue. Google Search Console confirmed my suspicion: the indexing report flagged errors tied to the sitemap file. Specifically, the sitemap was returning 403 (Forbidden) and 404 (Not Found) errors across multiple endpoints.

For context, the XML sitemap matters because it helps search engines like Google and Bing discover, crawl, and prioritize a website’s content. If the sitemap is inaccessible, new content isn’t indexed promptly, and changes to older pages can go unnoticed for much longer.

This problem was particularly damaging because we had just rolled out several new landing pages and blog content. Without indexing, they weren’t appearing in search engine results at all.

Root Cause: Overly Aggressive Security Plugin

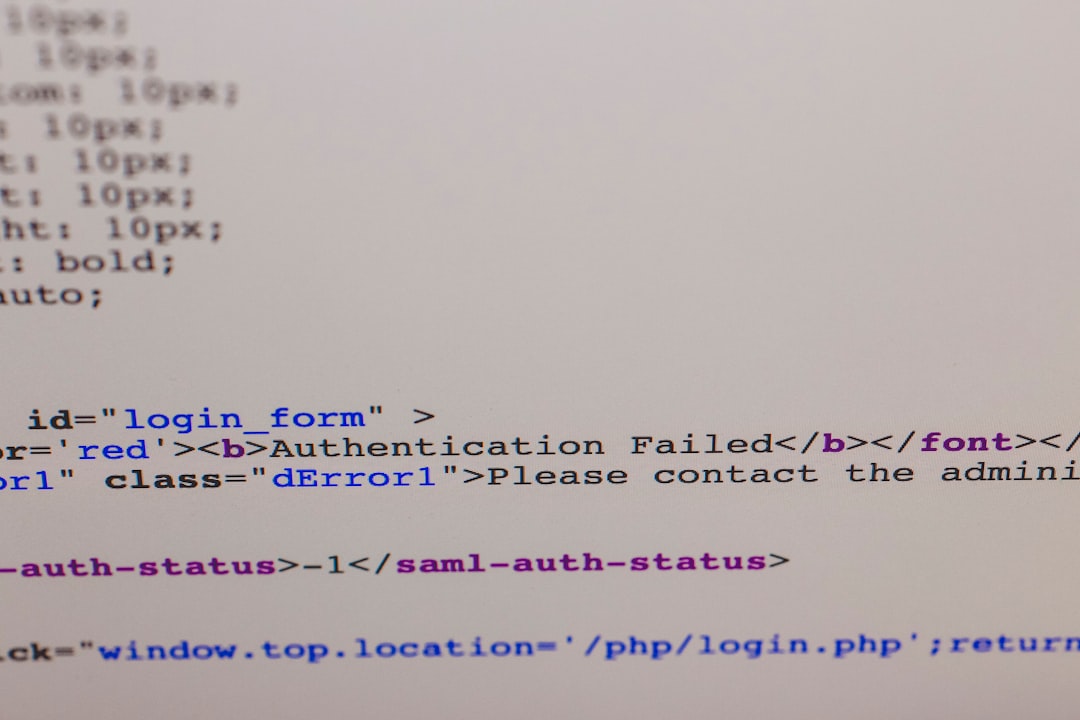

My immediate goal was to find out why the sitemap had become inaccessible. After checking the server logs and running a curl trace, I confirmed that requests to /wp-sitemap.xml and other dynamic sitemap endpoints were being blocked at the application firewall layer, not at the web server or CDN.
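One quick way to reproduce this kind of block is to request the same sitemap URL with different User-Agent headers and compare the status codes. The sketch below does that with Python’s standard library. The user-agent strings, the `probe` helper, and any example.com URL are illustrative assumptions, and a real firewall may also filter on IP ranges or request rates, so a clean result here doesn’t rule out blocking.

```python
import urllib.error
import urllib.request

# Illustrative User-Agent strings (assumptions); firewalls may also filter on
# IP ranges or request rates, which this probe does not reproduce.
USER_AGENTS = {
    "browser": "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 "
               "(KHTML, like Gecko) Chrome/120.0 Safari/537.36",
    "googlebot": "Mozilla/5.0 (compatible; Googlebot/2.1; "
                 "+http://www.google.com/bot.html)",
    "bingbot": "Mozilla/5.0 (compatible; bingbot/2.0; "
               "+http://www.bing.com/bingbot.htm)",
}


def fetch_status(url: str, user_agent: str, timeout: float = 10.0) -> int:
    """Send a GET with the given User-Agent and return the HTTP status code."""
    request = urllib.request.Request(url, headers={"User-Agent": user_agent})
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:
        # urlopen raises HTTPError for 4xx/5xx; the code is what we want to record.
        return err.code


def probe(url: str) -> dict[str, int]:
    """Map each User-Agent label to the status code the URL returned for it."""
    return {label: fetch_status(url, ua) for label, ua in USER_AGENTS.items()}
```

Calling `probe("https://example.com/wp-sitemap.xml")` against your own domain returns one status per label. A 403 that appears only for the crawler labels points at user-agent-based filtering rather than a missing file.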

After deactivating plugins one at a time, I found the culprit: a popular WordPress security plugin (whose name I’ll spare here). This plugin had implemented a “bot hardening” feature that mistakenly flagged sitemap generation and search engine crawlers as suspicious. As a result, it:

- Blocked XML sitemap requests from Googlebot and Bingbot

- Denied non-authenticated cron jobs that update sitemaps

- Disabled REST API endpoints needed by certain SEO plugins to dynamically build sitemaps

Although the plugin was effective at blocking malicious login attempts and brute-force attacks, it had no awareness of how SEO tools operate, and that blind spot had major SEO consequences.

The Cost of Broken Indexing

In just over ten days, our new blog content went completely unindexed. Traffic to our landing pages dropped by more than 25%, and Search Console impressions flatlined for URLs that had previously ranked in the top 10 results.

When search engines can’t access the sitemap, they rely solely on internal and external links to discover content. That works poorly for URLs that are recently published or have few links pointing to them. Worse, when Google repeatedly fails to fetch a faulty or missing sitemap, it may crawl the domain less eagerly, and that can take weeks to recover from.

Identifying a Path to Recovery

Once I had found the root cause, I worked through the fix in a structured sequence:

1. Disable the Conflicting Features

Within the security plugin’s configuration, I selectively turned off features known to interfere with search engine bots. This included “Advanced Bot Protection” and REST API restriction rules.

2. Whitelist Search Engine User Agents

The plugin offered only a limited whitelist option, so I manually added trusted crawler user agents such as Googlebot, Bingbot, and AhrefsBot. I also enabled user-agent logging to catch any misflagged requests in the future.
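One caveat with user-agent whitelisting: the User-Agent header is trivially spoofed, so a rule that trusts “Googlebot” also trusts anyone who claims to be Googlebot. Google and Bing both document a stronger check: reverse-resolve the requesting IP, confirm the hostname belongs to the crawler’s domain, then forward-resolve that hostname and confirm it maps back to the same IP. The sketch below is a minimal version of that check. The hostname suffixes are my assumption of the currently documented ones (check each search engine’s verification page), the `is_verified_crawler` name is hypothetical, and the forward lookup only covers IPv4. The resolvers are injectable so the logic can be tested without live DNS.

```python
import socket
from typing import Callable

# Crawler hostname suffixes as documented by Google and Bing at the time of
# writing (assumption: re-check the official verification pages).
TRUSTED_SUFFIXES = (
    ".googlebot.com",
    ".google.com",
    ".googleusercontent.com",
    ".search.msn.com",
)


def default_reverse(ip: str) -> str:
    # gethostbyaddr returns (hostname, aliaslist, ipaddrlist).
    return socket.gethostbyaddr(ip)[0]


def default_forward(hostname: str) -> list[str]:
    # gethostbyname_ex returns (hostname, aliaslist, ipaddrlist); IPv4 only.
    return socket.gethostbyname_ex(hostname)[2]


def is_verified_crawler(
    ip: str,
    reverse: Callable[[str], str] = default_reverse,
    forward: Callable[[str], list[str]] = default_forward,
) -> bool:
    """Reverse-resolve the IP, check its domain, then forward-resolve to confirm."""
    try:
        hostname = reverse(ip).rstrip(".").lower()
    except OSError:  # socket.herror / gaierror are OSError subclasses
        return False
    if not hostname.endswith(TRUSTED_SUFFIXES):
        return False
    try:
        # The forward lookup must point back at the original IP; a spoofed
        # PTR record on an attacker's IP fails here.
        return ip in forward(hostname)
    except OSError:
        return False
```

In practice you would call this with the client IP from your access logs, and cache results, since DNS lookups on every request are slow.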

3. Use a Different XML Sitemap Generator

I moved away from the built-in WordPress sitemap system, which in my setup appeared to be caught by the REST API restrictions, and installed Yoast SEO, whose dedicated sitemap module replaces the core WordPress sitemaps instead of relying on them.

Once activated, Yoast immediately generated /sitemap_index.xml along with granular per-content-type sitemaps such as /post-sitemap.xml, /page-sitemap.xml, and sitemaps for custom taxonomies.
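Because Yoast splits the sitemap into an index plus per-type files, checking only /sitemap_index.xml isn’t enough: each child sitemap needs to be reachable too. A minimal sketch for extracting the child sitemap URLs from an index document, assuming the standard sitemaps.org namespace and an index you have already fetched as bytes (`child_sitemaps` is a hypothetical helper name):

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def child_sitemaps(index_xml: bytes) -> list[str]:
    """Return the <loc> URL of every <sitemap> entry in a sitemap index."""
    root = ET.fromstring(index_xml)
    return [
        loc.text.strip()
        for loc in root.findall("sm:sitemap/sm:loc", SITEMAP_NS)
        if loc.text
    ]
```

The resulting list can be fed into whatever status check you already run, so a newly added taxonomy sitemap is covered automatically.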

4. Submit New Sitemaps to Search Console

I submitted the newly generated sitemaps to both Google Search Console and Bing Webmaster Tools, and validated that each endpoint returned a 200 OK response from several geographic locations (especially important if you use Cloudflare or a similar caching layer).
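A 200 status on its own can be misleading, because a block page or a cached error page may come back as HTML rather than XML. The helper below is a sketch under that assumption (`sitemap_problems` is my own hypothetical name, not part of any plugin): it checks the status code, the Content-Type, and whether the body parses as a sitemap. It takes an already-fetched response, so you can feed it results gathered from whichever locations or monitoring services you use.

```python
import xml.etree.ElementTree as ET


def sitemap_problems(status: int, content_type: str, body: bytes) -> list[str]:
    """Return human-readable problems; an empty list means the response looks healthy."""
    problems = []
    if status != 200:
        problems.append(f"expected 200 OK, got {status}")
    # Sitemaps are normally served as application/xml or text/xml;
    # drop parameters such as "; charset=UTF-8" before comparing.
    media_type = content_type.split(";")[0].strip().lower()
    if media_type not in ("application/xml", "text/xml"):
        problems.append(f"unexpected Content-Type {content_type!r}")
    try:
        root = ET.fromstring(body)
    except ET.ParseError as err:
        problems.append(f"body is not well-formed XML: {err}")
    else:
        # Strip the namespace, e.g. '{http://...}urlset' -> 'urlset'.
        tag = root.tag.rsplit("}", 1)[-1]
        if tag not in ("urlset", "sitemapindex"):
            problems.append(f"unexpected root element <{tag}>")
    return problems
```

Returning a list of reasons rather than a single boolean makes it easier to tell a firewall block (wrong status) apart from a caching layer serving the wrong content (wrong type or root element).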

5. Monitor Indexing with the URL Inspection Tool

The Search Console URL Inspection Tool became vital during the recovery phase. I tested several affected URLs and confirmed that Google could queue them back into the indexing pipeline. The sitemap error messages disappeared after two days.

Lessons Learned and Prevention Strategies

This ordeal provided some hard-won lessons that should serve any site admin, SEO specialist, or WordPress developer well.

- Don’t install plugins without compatibility research. Always check how new security tools interact with caching systems and search engine bots.

- Use well-maintained, SEO-aware plugins. Not all plugins are built with SEO in mind. Choosing reliable tools like Yoast or Rank Math can prevent breakdowns.

- Automate sitemap health checks. A simple daily script that checks for 200 status codes on critical SEO files (sitemaps, robots.txt) can catch issues early.

- Keep a proactive indexing watchlist. Track indexing status of priority URLs weekly using something like Screaming Frog, Ahrefs, or manual inspection.
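The daily health check suggested above can be very small. Here is a minimal sketch using only Python’s standard library; the URL list is a placeholder, `check_urls` is a hypothetical helper, and scheduling (cron, a CI job) and alerting are left to your environment.

```python
import urllib.error
import urllib.request

# Placeholder list of critical SEO files; replace with your own URLs.
CRITICAL_URLS = [
    "https://example.com/robots.txt",
    "https://example.com/sitemap_index.xml",
]


def check_urls(urls: list[str], timeout: float = 10.0) -> dict[str, str]:
    """Return {url: reason} for every URL that did not end in a 200 OK."""
    failures: dict[str, str] = {}
    for url in urls:
        try:
            # urlopen follows redirects, so this is the final response's status.
            with urllib.request.urlopen(url, timeout=timeout) as response:
                if response.status != 200:
                    failures[url] = f"status {response.status}"
        except urllib.error.HTTPError as err:
            failures[url] = f"status {err.code}"
        except OSError as err:
            # URLError, connection errors and timeouts all subclass OSError.
            failures[url] = f"unreachable: {err}"
    return failures
```

`check_urls(CRITICAL_URLS)` returns an empty dict when every file answered 200 OK; anything else is worth an alert. Note that this fetches with urllib’s default User-Agent, so it won’t catch blocks that target crawler user agents specifically.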

The Outcome: Restored Visibility

Three days after I implemented the sitemap regeneration workflow and fixed the firewall rules, Search Console started reporting previously missing URLs as indexed again. By the following week, our new content was showing up in search results, and traffic had steadily rebounded to baseline levels.

Seeing a full recovery reinforced the value of keeping a close watch where technical SEO and web security intersect. In environments where changes happen fast, side effects like broken sitemaps can go unnoticed and cause avoidable losses.

Conclusion

What started as a routine security enhancement ended up costing me crucial search visibility, simply because the sitemap was being blocked without anyone noticing. The experience was a wake-up call about how essential it is to consider SEO implications during technical changes, especially those involving firewalls, plugins, or permission models.

Fortunately, with careful auditing, SEO-aware tools, and a solid recovery workflow, it’s possible to restore lost visibility quickly, before deeper ranking losses take root. Treat your XML sitemap as a monitored asset, and make sure every automated safeguard you introduce doesn’t become an unexpected barrier to discovery.