As organizations adopt increasingly sophisticated artificial intelligence (AI) solutions, effectively managing and forecasting the costs associated with AI workloads has become a critical aspect of financial operations (FinOps). While training large models generally receives most of the attention, operationalizing AI—especially inference at scale—can introduce unpredictable cost complexities. This article explores how teams can apply practical FinOps principles to accurately forecast AI inference costs, bringing data-driven accountability and predictability to modern AI workloads.

Understanding AI Inference Costs

Inference refers to the process of running data through a trained AI model to generate predictions or insights. Unlike training, which is typically a one-time or infrequent cost, inference is often continuous and tied directly to user activity or business events. Inference workloads can run in a variety of environments, such as:

- Edge devices – smartphones, sensors, autonomous vehicles

- Cloud-based APIs – MLOps platforms, customer-facing applications

- Batch processing systems – fraud detection, anomaly analysis

Each of these deployment contexts can lead to significantly different cost dynamics. Understanding and forecasting these expenditures is vital for effective financial management.

Key Cost Drivers in AI Inference

Before diving into forecasting techniques, companies need a solid grasp of what contributes to AI inference costs. Typical cost-driving factors include:

- Model size and complexity: Larger models require more compute and memory resources per inference.

- Request volume and frequency: The higher the number of inference requests, the higher the runtime cost.

- Hardware and infrastructure: Running inference on high-end GPUs versus cost-optimized CPUs creates dramatically different cost profiles.

- Deployment environment: Pricing differs across public clouds, on-premises hardware, and edge deployments.

- Concurrency and latency requirements: Low-latency or real-time processing often requires provisioning more compute resources, increasing costs.
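To see how these drivers interact, the sketch below estimates the infrastructure cost of a single request from three inputs: the instance's hourly price, the throughput the model sustains on it, and how much of that throughput is actually used. The function name, the prices, and the throughput figures are all hypothetical and chosen only for illustration; real numbers should come from your own benchmarks and your provider's price list.

```python
def cost_per_request(hourly_instance_price: float,
                     requests_per_second: float,
                     utilization: float) -> float:
    """Approximate infrastructure cost of one request on one instance.

    hourly_instance_price: price of the instance per hour (hypothetical figure)
    requests_per_second:   peak throughput the instance sustains for this model
    utilization:           fraction of that peak actually served (0 < u <= 1)
    """
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    requests_per_hour = requests_per_second * 3600 * utilization
    return hourly_instance_price / requests_per_hour


# Hypothetical comparison: a GPU instance versus a cheaper CPU instance.
gpu = cost_per_request(hourly_instance_price=4.00, requests_per_second=200, utilization=0.5)
cpu = cost_per_request(hourly_instance_price=0.40, requests_per_second=10, utilization=0.5)
print(f"GPU: ${gpu:.6f} per request, CPU: ${cpu:.6f} per request")
```

With these made-up figures the more expensive GPU instance is cheaper per request because its throughput is so much higher. At low utilization the comparison can easily flip, which is why hardware choice and request volume need to be considered together.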

Building a FinOps Framework for Inference

To manage and forecast inference costs effectively, organizations should adopt a structured FinOps approach. A practical FinOps framework for AI inference focuses on collaboration between engineering, data science, and finance teams to ensure cost-awareness is embedded into every part of the inference pipeline.

1. Establish Baseline Metrics

Start by capturing current usage and cost metrics. These include:

- Total inference requests per day/week/month

- Average compute time per inference

- Cost per inference by model and endpoint

- Resource utilization (e.g., GPU utilization, memory consumption)

This baseline data enables teams to understand where their current cost centers lie and serves as the foundation for forecasting future trends.
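As a minimal sketch of what such a baseline could look like, the following aggregates a request log into per-endpoint request counts, average compute time, and cost per inference. The log format, the endpoint names, and the per-second prices are assumptions made for the example; in practice these values would come from your serving logs and billing exports.

```python
from collections import defaultdict

# Hypothetical request log: (endpoint, compute_seconds) for each request.
request_log = [
    ("fraud-v2", 0.120),
    ("fraud-v2", 0.100),
    ("recsys-v1", 0.030),
    ("recsys-v1", 0.050),
    ("recsys-v1", 0.040),
]

# Hypothetical cost of one second of compute per endpoint, in USD.
PRICE_PER_SECOND = {"fraud-v2": 0.0010, "recsys-v1": 0.0002}


def baseline(log, price_per_second):
    """Aggregate raw request records into per-endpoint baseline metrics."""
    totals = defaultdict(lambda: {"requests": 0, "compute_seconds": 0.0})
    for endpoint, seconds in log:
        totals[endpoint]["requests"] += 1
        totals[endpoint]["compute_seconds"] += seconds

    report = {}
    for endpoint, t in totals.items():
        cost = t["compute_seconds"] * price_per_second[endpoint]
        report[endpoint] = {
            "requests": t["requests"],
            "avg_compute_seconds": t["compute_seconds"] / t["requests"],
            "cost_per_inference": cost / t["requests"],
        }
    return report


metrics = baseline(request_log, PRICE_PER_SECOND)
for endpoint, m in metrics.items():
    print(endpoint, m)
```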

2. Categorize Inference Workloads

Not all inference workloads are created equal. Group them based on factors such as:

- Business criticality: Is the model supporting customer-facing functionality or internal analytics?

- Performance requirements: Real-time vs. batch processing

- Scaling behavior: Elastic workloads vs. steady state

With these categories in place, finance and engineering teams can assign cost optimization strategies more effectively and create tailored forecasts for each cluster of workloads.
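One lightweight way to record these categories is as structured metadata on each workload, so that forecasts and optimization policies can be applied per group. The sketch below assumes a simple yes/no value for each of the three factors above; the `Workload` class and the workload names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Workload:
    name: str
    customer_facing: bool   # business criticality
    real_time: bool         # performance requirement
    elastic: bool           # scaling behavior


def category(w: Workload) -> tuple:
    """Return a (criticality, performance, scaling) label for a workload."""
    return (
        "customer-facing" if w.customer_facing else "internal",
        "real-time" if w.real_time else "batch",
        "elastic" if w.elastic else "steady-state",
    )


def group_by_category(workloads):
    """Group workload names by their category label."""
    groups = {}
    for w in workloads:
        groups.setdefault(category(w), []).append(w.name)
    return groups


workloads = [
    Workload("fraud-scoring", customer_facing=True, real_time=True, elastic=True),
    Workload("recommendations", customer_facing=True, real_time=True, elastic=True),
    Workload("nightly-anomaly-scan", customer_facing=False, real_time=False, elastic=False),
]
groups = group_by_category(workloads)
for label, names in groups.items():
    print(label, names)
```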

3. Apply Usage Forecasting Models

Once inference workloads are segmented, apply forecasting techniques to project their future use—and by extension, their cost. Common techniques include:

- Linear regression: For systems with steady growth

- Time series models (e.g., ARIMA, Prophet): For workloads with seasonality patterns

- Machine learning-based forecasting: If historical usage data has nonlinear growth trends

Accurate usage projections empower finance teams to predict monthly inference expenses and support budgeting cycles with greater confidence.
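For the simplest case, steady growth, a linear trend can be fitted with ordinary least squares and extended a few periods ahead. The sketch below uses only the Python standard library and a made-up request history; workloads with seasonality would need one of the time series models mentioned above instead.

```python
def fit_line(ys):
    """Least-squares fit of y = intercept + slope * t for t = 0, 1, 2, ..."""
    n = len(ys)
    if n < 2:
        raise ValueError("need at least two observations")
    t_mean = (n - 1) / 2
    y_mean = sum(ys) / n
    sxy = sum((t - t_mean) * (y - y_mean) for t, y in enumerate(ys))
    sxx = sum((t - t_mean) ** 2 for t in range(n))
    slope = sxy / sxx
    intercept = y_mean - slope * t_mean
    return intercept, slope


def forecast(ys, periods_ahead):
    """Extend the fitted trend for the next `periods_ahead` periods."""
    intercept, slope = fit_line(ys)
    n = len(ys)
    return [intercept + slope * (n + k) for k in range(periods_ahead)]


# Hypothetical monthly request counts for one workload category.
monthly_requests = [1_000_000, 1_100_000, 1_200_000, 1_300_000]
next_three = forecast(monthly_requests, 3)
print([round(x) for x in next_three])
```

Multiplying each projected request count by the baseline cost per inference gives a first-pass monthly cost forecast for that workload category.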

4. Map Usage to Cost Calendars

Translate projected usage into financial forecasts using real-world pricing structures from infrastructure providers. This involves:

- Mapping compute time (e.g., seconds of GPU use) to hourly pricing

- Adding ancillary costs such as storage, data egress, or licensing fees

- Accounting for tiered pricing models and volume discounts

Budget granularity should match the operational context—for example, per model endpoint, per region, or per customer segment.
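The mapping from usage to cost can be sketched in a few lines. The example below converts GPU seconds into a charge at an hourly rate, applies graduated tier pricing to data egress, and adds a flat licensing fee. Every price, tier boundary, and volume here is hypothetical; actual pricing structures vary by provider and contract.

```python
def compute_cost(gpu_seconds, hourly_price):
    """Convert seconds of GPU use into a charge at an hourly rate."""
    return gpu_seconds / 3600 * hourly_price


def tiered_cost(units, tiers):
    """Graduated tier pricing.

    tiers: list of (upper_bound, price_per_unit) in ascending order, where the
    last upper_bound is None (unbounded). Each price applies only to the units
    that fall inside that tier.
    """
    cost = 0.0
    lower = 0
    for upper, price in tiers:
        if upper is None or units < upper:
            return cost + (units - lower) * price
        cost += (upper - lower) * price
        lower = upper
    raise ValueError("last tier must be unbounded (upper_bound=None)")


# Hypothetical monthly projection for one endpoint.
projected_requests = 2_500_000
gpu_seconds_per_request = 0.05
egress_gb = 1500
egress_tiers = [(1000, 0.09), (None, 0.07)]  # USD per GB, made-up rates
licensing_fee = 200.0

compute = compute_cost(projected_requests * gpu_seconds_per_request, hourly_price=3.00)
egress = tiered_cost(egress_gb, egress_tiers)
total = compute + egress + licensing_fee
print(f"compute={compute:.2f} egress={egress:.2f} total={total:.2f}")
```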

Optimizing and Controlling Inference Spend

Forecasting is not only about anticipating costs but also about identifying opportunities to control them. Effective FinOps practices include:

1. Right-Sizing Models

Reducing model complexity with little or no loss of accuracy can directly lower the cost of each inference. Employ techniques such as:

- Model pruning

- Quantization

- Knowledge distillation

These approaches can reduce inference latency and resource consumption, leading to measurable savings.
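To make one of these techniques concrete, here is a toy sketch of symmetric 8-bit quantization applied to a handful of weights. It is a simplified illustration in plain Python, not how a production framework implements quantization; the weight values are made up. The point is that each weight is stored as a small integer plus one shared scale factor, at the cost of a bounded rounding error.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the integers and the scale."""
    return [v * scale for v in quantized]


weights = [0.5, -1.27, 0.0, 0.9]          # made-up weights
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q, f"scale={scale:.4f}", f"max_error={max_error:.6f}")
```

An 8-bit integer takes one byte, compared with four bytes for a 32-bit float, so the memory footprint of the quantized weights is roughly a quarter of the original. Whether that translates into lower serving cost depends on the hardware and runtime, and accuracy should be re-validated after quantizing.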

2. Auto-scaling Infrastructure

Leverage cloud-native services to scale compute resources dynamically in response to demand. This prevents over-provisioning and reduces idle runtime, which is a common source of waste in AI infrastructure.
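The effect on cost can be estimated before changing any infrastructure. The sketch below compares a fleet sized permanently for peak demand with one that is resized every hour to match demand. The demand profile, per-replica capacity, and hourly price are hypothetical, and the model ignores scale-up delays and minimum billing increments that real autoscalers have.

```python
import math


def replicas_needed(requests_per_second, capacity_per_replica, min_replicas=1):
    """Smallest replica count that can serve the given request rate."""
    return max(min_replicas, math.ceil(requests_per_second / capacity_per_replica))


def daily_cost(hourly_demand, capacity_per_replica, hourly_price, fixed_replicas=None):
    """Cost of serving one day of hourly demand values.

    If fixed_replicas is given, the fleet size never changes; otherwise the
    fleet is resized each hour to the minimum that covers demand.
    """
    total = 0.0
    for rps in hourly_demand:
        if fixed_replicas is not None:
            n = fixed_replicas
        else:
            n = replicas_needed(rps, capacity_per_replica)
        total += n * hourly_price
    return total


# Hypothetical demand in requests per second for each of 24 hours.
hourly_demand = [20] * 8 + [100] * 8 + [250] * 8
CAPACITY = 50        # requests per second one replica can handle (assumed)
PRICE = 2.00         # USD per replica-hour (assumed)

peak = replicas_needed(max(hourly_demand), CAPACITY)
fixed = daily_cost(hourly_demand, CAPACITY, PRICE, fixed_replicas=peak)
scaled = daily_cost(hourly_demand, CAPACITY, PRICE)
print(f"peak-sized fleet: ${fixed:.2f}/day, auto-scaled fleet: ${scaled:.2f}/day")
```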

3. Implementing Budgets and Alerts

Set cost thresholds and automated alerts within your cloud or FinOps tools to detect anomalies or usage spikes early. This proactive measure helps prevent budget overruns and allows teams to adjust workloads in near real time.
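Most cloud billing tools provide budget alerts out of the box, but the underlying logic is simple enough to sketch. The hypothetical function below raises two kinds of alert from a series of daily costs: one when the month's projected spend exceeds the budget, and one when the latest day is a statistical outlier compared with the preceding days. The thresholds and cost figures are assumptions for illustration.

```python
from statistics import mean, stdev


def budget_alerts(daily_costs, monthly_budget, days_in_month=30, z_threshold=3.0):
    """Return a list of alert messages for a month-to-date series of daily costs."""
    alerts = []

    # Alert 1: straight-line projection of month-end spend versus budget.
    projected = sum(daily_costs) / len(daily_costs) * days_in_month
    if projected > monthly_budget:
        alerts.append(
            f"projected spend {projected:.2f} exceeds budget {monthly_budget:.2f}"
        )

    # Alert 2: latest day is far above the mean of the previous days.
    history, latest = daily_costs[:-1], daily_costs[-1]
    if len(history) >= 2:
        mu, sigma = mean(history), stdev(history)
        if sigma > 0 and (latest - mu) / sigma > z_threshold:
            alerts.append(f"daily cost {latest:.2f} is a spike versus mean {mu:.2f}")

    return alerts


# Hypothetical daily inference costs in USD; the last day contains a spike.
costs = [100, 102, 98, 101, 99, 180]
for message in budget_alerts(costs, monthly_budget=3000):
    print(message)
```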

How to Communicate Inference Costs to the Business

One of FinOps’ key tenets is ensuring that technical costs are visible and understandable to business stakeholders. To that end:

- Translate costs into business KPIs: For example, “Cost per fraud detection” or “Cost per personalized recommendation.”

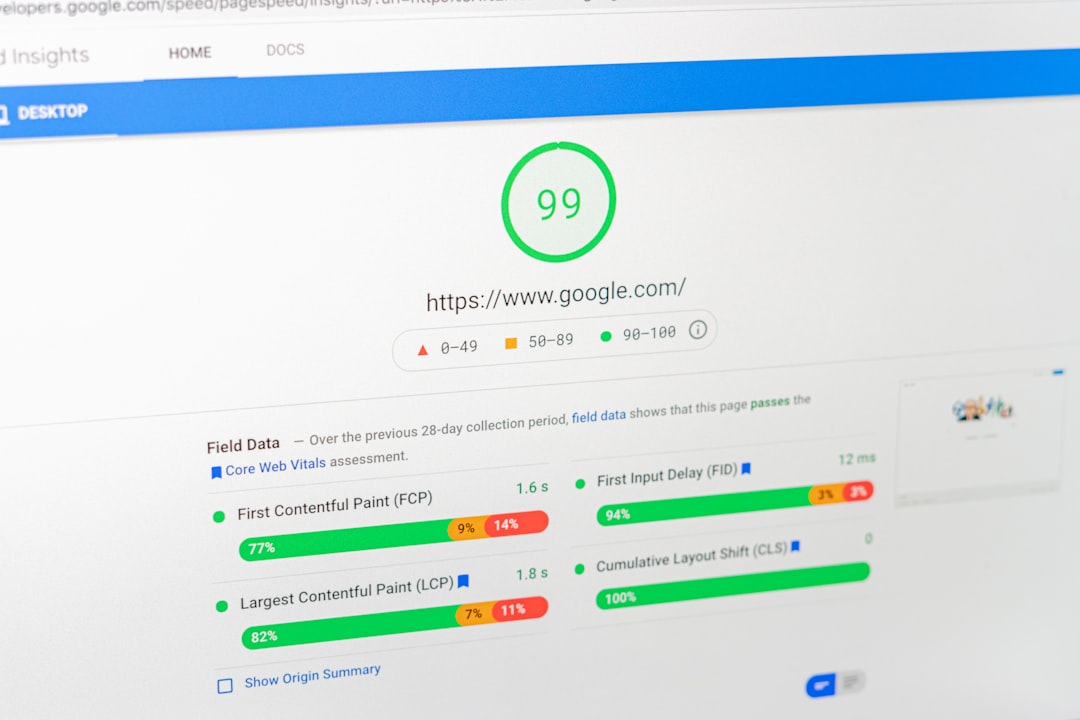

- Create dashboards: Offer real-time visibility into model performance, utilization, and cost so teams can track efficiency and justify expenses.

- Run ROI analyses: Compare the cost of inference with the resulting business value, such as increased revenue or cost savings.
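The arithmetic behind these business-facing numbers is straightforward. As a minimal sketch with made-up figures, the following computes a cost per business outcome and a simple ROI ratio; how "business value" is measured is an assumption each organization has to define for itself.

```python
def cost_per_outcome(total_inference_cost, outcomes):
    """Inference spend divided by the number of business outcomes it produced."""
    if outcomes <= 0:
        raise ValueError("outcomes must be positive")
    return total_inference_cost / outcomes


def roi(business_value, total_inference_cost):
    """Return on investment as a ratio: net value gained per unit of cost."""
    if total_inference_cost <= 0:
        raise ValueError("total_inference_cost must be positive")
    return (business_value - total_inference_cost) / total_inference_cost


# Hypothetical month for a fraud detection model.
monthly_inference_cost = 12_000.0   # USD
fraud_cases_detected = 400
losses_prevented = 60_000.0         # USD, estimated business value

per_detection = cost_per_outcome(monthly_inference_cost, fraud_cases_detected)
fraud_roi = roi(losses_prevented, monthly_inference_cost)
print(f"cost per fraud detection: ${per_detection:.2f}, ROI: {fraud_roi:.1f}x")
```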

Common Challenges in Forecasting Inference Costs

Despite best intentions, several challenges can hinder accurate forecasting:

- Rapid scaling: Viral application usage can dramatically change inference demand overnight, skewing forecasts.

- Model sprawl: Running multiple versions of a model in production complicates cost attribution.

- Shadow AI deployments: Teams deploying inference endpoints without centralized oversight can create visibility gaps.

Addressing these challenges requires mature AI governance, centralized model registries, and collaboration between engineering, DevOps, and finance.
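A centralized registry helps with both model sprawl and shadow deployments, because billing data can be joined against it. The sketch below assumes a hypothetical registry that maps endpoint identifiers to a model name and version, and billing rows of the form (endpoint, cost). Spend on endpoints missing from the registry is surfaced separately as a visibility gap.

```python
def attribute_costs(billing_rows, registry):
    """Split spend into per-(model, version) totals and unregistered endpoints.

    billing_rows: iterable of (endpoint_id, cost)
    registry:     dict mapping endpoint_id -> (model_name, version)
    """
    by_version = {}
    unattributed = {}
    for endpoint, cost in billing_rows:
        owner = registry.get(endpoint)
        if owner is None:
            # Endpoint is not in the registry: a possible shadow deployment.
            unattributed[endpoint] = unattributed.get(endpoint, 0.0) + cost
        else:
            by_version[owner] = by_version.get(owner, 0.0) + cost
    return by_version, unattributed


# Hypothetical registry and billing export.
registry = {"ep-1": ("fraud", "v1"), "ep-2": ("fraud", "v2")}
billing = [("ep-1", 120.0), ("ep-2", 300.0), ("ep-1", 30.0), ("ep-9", 75.0)]

by_version, unattributed = attribute_costs(billing, registry)
print("attributed:", by_version)
print("unregistered endpoints:", unattributed)
```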

Tools and Platforms for AI FinOps

Several tools support tracking and forecasting AI inference costs with increasing precision. Worth evaluating are:

- Cloud-native tools: AWS Cost Explorer, Azure Cost Management, or Google Cloud Billing reports

- MLOps platforms: Weights & Biases, MLflow, or Seldon Core with built-in logging and monitoring

- FinOps aggregators: CloudHealth, Apptio Cloudability, or Spot.io that offer AI workload tagging and analysis

Choose tools that integrate well with your CI/CD pipeline and observability systems to drive real-time insights into inference economics.

Conclusion

AI inference is a crucial component of delivering intelligent applications at scale—but without careful cost forecasting, organizations risk overspending or under-budgeting. By implementing a thoughtful FinOps approach, companies can align technical deployment with financial discipline, ensuring sustainable AI innovation.

Forecasting AI inference costs isn’t a one-time task; it’s a continuous process powered by accurate data, interdepartmental communication, disciplined budgeting, and the right tools. As AI matures in the enterprise, so too must cost governance—making FinOps a strategic imperative in the age of artificial intelligence.